MacTalk

October 2023

Upcoming Contact Key Verification Feature Promises Secure Identity Verification for iMessage

Apple relies on increasingly outdated notions of end-to-end security for your messages with other people. While the company has regularly applied fixes to iMessage and its Messages apps to improve security and privacy, it hasn’t kept up with industry lessons and innovations. Apple is promising to change one key (sorry) component of that this year with an optional feature currently available in developer betas of iOS 17.2, iPadOS 17.2, macOS 14.2, and watchOS 10.2. The company announced the process and timeline on 27 October 2023 on its Security Research blog.

The feature is called Contact Key Verification, and it does just what its name says: it lets you add a manual verification step in an iMessage conversation to confirm that the other person is who their device says they are. (SMS conversations lack any reliable method for verification—sorry, green-bubble friends.) Instead of relying on Apple to verify the other person’s identity using information stored securely on Apple’s servers, you and the other party read a short verification code to each other, either in person or on a phone call. Once you’ve validated the conversation, your devices maintain a chain of trust in which neither you nor the other person has given any private encryption information to each other or Apple. If anything changes in the encryption keys each of you verified, the Messages app will notice and provide an alert or warning.

Apple’s post is written for the security community, so it references numerous complex cryptography concepts. Since Contact Key Verification is aimed at only those people who have good reason to believe that hostile parties with significant resources want to compromise their communications, let me give you three levels of explanation: the first is just a quick overview, the next provides more details, and the last digs more deeply into the underpinnings.

To be clear, few people need this level of messaging security. If you’re a journalist, public figure, human-rights advocate, politician, well-known financier, or other likely target of thieves, governments, or tech-savvy stalkers, Contact Key Verification’s additional level of safety might seem like a godsend. The rest of us can decide if we care enough about the remote possibility of impersonation in an iMessage conversation to put up with the extra verification step.

Just the Facts

When you first started using iMessage within Messages, Apple had your device set up encryption keys. The public portion of that setup is shared with Apple, which stores it on your behalf and uses it to create a secure connection whenever you have a Messages chat with blue-bubble friends.

With Contact Key Verification, you go an extra mile to detect and prevent impersonation: you and the person with whom you’re communicating verify your identities in Messages. You do this by sharing a numeric code that lets you manually establish cryptographic trust between your accounts and devices.

If anything breaks that cryptographic trust, the Messages app becomes aware of this—no involvement by Apple is necessary. Messages then notifies you, the other party, or both of you with a message that explains why, and it suggests actions you can take now that you can no longer trust the connection.

High-Level Tour of the Contact Key Verification Process

Let’s look at each step of how Contact Key Verification works in a little more detail.

- Currently, all iMessage conversations are secured end-to-end by having one of your devices initially create a public-private key pair. It retains, secures, and syncs the private key among your devices and shares the public key with Apple. Apple maintains the public key in a key directory service that enables it to connect iMessage participants.

- With Contact Key Verification, your devices generate another encryption key that’s only synced securely among your devices and not shared with Apple in any way. That new key is used to validate your previously provided public key through a process known as signing. Your device sends Apple the signed message, which reveals nothing of the original key. Apple now has your public key and a separate verifiable signature that can be used to verify whether the public key has changed in Apple’s key directory service.

- To use Contact Key Verification, Apple will let you and another party confirm a short numeric code with each other to verify each other’s identities in an iMessage conversation. (Other messaging apps, like Signal and WhatsApp, offer a similar verification option.)

- This verification step of confirming a numeric code is cryptographically strong because it doesn’t transmit any private information between you. Plus, Apple isn’t party to the verification process—it doesn’t involve Apple servers, mediation, or storage.

- However, Apple does play a role in verification. The company will continuously publish a cryptographically scrambled and anonymized list containing iMessage user public keys and the signed component that’s delivered whenever anyone enables Contact Key Verification. This list is generically called a transparency log. Apple has already started such a log, generating more than two billion entries per week! (Apple says in its blog post that WhatsApp has already launched a similar log.)

- When anyone who has opted into Contact Key Verification communicates with someone else using iMessage, both parties’ devices independently consult the public transparency log to ensure that neither the log nor the keys they exchanged have been compromised. During this process, the devices reveal nothing about their private or local encryption keys to Apple (or anyone else).

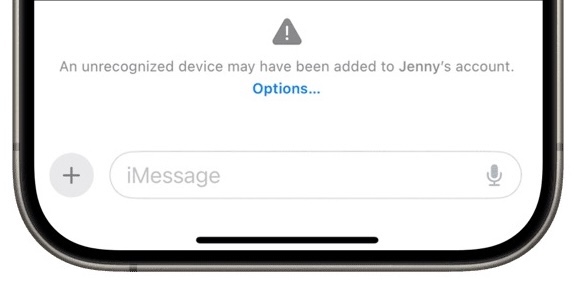

- Messages warns you when anything unusual or dangerous happens, such as another device being added to the other person’s account without proper validation, a key failing to match a previously verified one, or your contact using a key that’s outdated compared to the updated information on the transparency log.
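To make the transparency log idea in the steps above more concrete, here is a minimal Python sketch of an append-only log in which each entry is chained to the previous one by a hash. It is only an illustration: the class name `ToyTransparencyLog`, the helper functions, and the sample accounts and keys are all hypothetical, and a simple hash chain is a stand-in for whatever data structure Apple actually uses, which the article does not describe.

```python
import hashlib

GENESIS_HEAD = b"\x00" * 32  # assumed starting value for an empty log


def entry_hash(prev_head: bytes, account: str, public_key: bytes, signature: bytes) -> bytes:
    """Hash one log entry together with the head that preceded it."""
    h = hashlib.sha256()
    for part in (prev_head, account.encode(), public_key, signature):
        h.update(len(part).to_bytes(4, "big"))  # length prefix avoids ambiguous joins
        h.update(part)
    return h.digest()


class ToyTransparencyLog:
    """Hypothetical append-only log; not Apple's real format."""

    def __init__(self) -> None:
        self.entries = []
        self.head = GENESIS_HEAD

    def append(self, account: str, public_key: bytes, signature: bytes) -> bytes:
        self.entries.append((account, public_key, signature))
        self.head = entry_hash(self.head, account, public_key, signature)
        return self.head


def recompute_head(entries) -> bytes:
    """What an independent auditor or device would do: replay every entry."""
    head = GENESIS_HEAD
    for account, public_key, signature in entries:
        head = entry_hash(head, account, public_key, signature)
    return head


log = ToyTransparencyLog()
log.append("alice@example.com", b"alice-public-key", b"alice-signature")
published_head = log.append("bob@example.com", b"bob-public-key", b"bob-signature")

# An honest log replays to the published head.
print(recompute_head(log.entries) == published_head)

# Quietly swapping Alice's key changes every later head, so the swap is detectable.
tampered = [("alice@example.com", b"attacker-key", b"alice-signature")] + log.entries[1:]
print(recompute_head(tampered) == published_head)
```

The point of the sketch is that anyone holding a previously published head can notice if history is rewritten, because changing one old entry alters every head that follows it.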

As a practical example of what could happen, suppose some government intelligence agency has identified reporters, politicians, or others communicating over iMessage with human-rights advocates, spies, or other people. The agency’s goal is to impersonate the former parties to gain the trust of the latter and discover their location, the people with whom they communicate, and other confidential information.

Let’s say the agency breaks into Apple’s key directory service and manages to replace the public keys of the former group with ones under their control without Apple noticing, or finds an exploit that lets them fool copies of Messages into accepting alternative public keys. (These scenarios are extremely unlikely, but Apple admits that it’s not impossible to subvert the delivery of public keys from a central source.)

Without key verification, if such an exploit were carried out, intelligence agents could communicate with people in the latter group using suborned accounts, and iMessage wouldn’t notice. That’s because the end-to-end connection would switch to the new key associated with the contact, a normal occurrence when public keys change, and the conversation would continue. The reporter or politician would have no idea that they were actually talking to intelligence agents.

However, with Contact Key Verification in place, while the public key could ostensibly be replaced without notice, the signature associated with that public key could not be changed. Messages would try to validate the information using the public transparency log, determine the key had changed without a new one-to-one validation step, and throw a warning.
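The detection logic in that scenario can be sketched in a few lines of Python. This is a deliberately simplified model, assuming the device just remembers a fingerprint of the key that was in place when you verified the contact; the `VerifiedContacts` class, its method names, and the status strings are hypothetical and are not how Messages is actually implemented.

```python
import hashlib
from typing import Dict


def fingerprint(public_key: bytes) -> str:
    """Short, comparable stand-in for a full public key."""
    return hashlib.sha256(public_key).hexdigest()


class VerifiedContacts:
    """Remembers the key fingerprint each contact had when it was verified."""

    def __init__(self) -> None:
        self._pinned: Dict[str, str] = {}

    def mark_verified(self, contact: str, public_key: bytes) -> None:
        self._pinned[contact] = fingerprint(public_key)

    def check(self, contact: str, directory_key: bytes) -> str:
        """Compare the key the directory returned with the one we verified."""
        pinned = self._pinned.get(contact)
        if pinned is None:
            return "unverified"
        if pinned == fingerprint(directory_key):
            return "ok"
        return "warning: key changed since verification"


contacts = VerifiedContacts()
contacts.mark_verified("reporter@example.com", b"reporter-real-key")

print(contacts.check("reporter@example.com", b"reporter-real-key"))
print(contacts.check("reporter@example.com", b"agency-substitute-key"))
print(contacts.check("stranger@example.com", b"some-key"))
```

Without the pinned fingerprint, the second lookup would look like an ordinary key change; with it, the substitution stands out.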

Background: It’s All about the Key Directory Service

The true purpose of Contact Key Verification is to provide security underpinnings to an online interaction. Apple isn’t helping you verify that the other person is the person you think they are. That’s entirely on you! You have to know who they are, know their phone number or email address, and potentially know things about them that couldn’t be learned online.

When you deploy Contact Key Verification with someone you already know, you upgrade an existing conversation from “I think I know this person” to “I know this person, and we now have an out-of-band encryption verification step to keep our conversations secure and tamper resistant.”

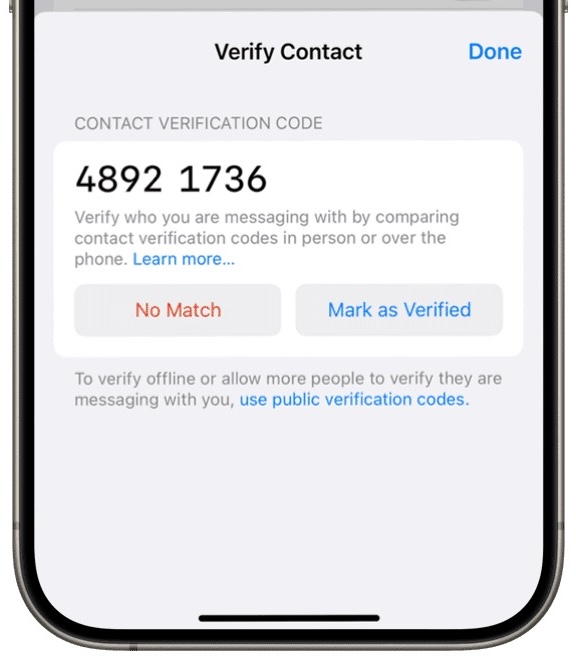

All you have to do is pull up an existing conversation and then use some trusted method to read the provided code, as you can see below. If the code matches, you each tap Mark As Verified.

You want to exchange this number through a method other than Messages: all Messages communications are in-band (using the same pathway as the one you’re trying to secure), and you want a separate out-of-band pathway that can’t be subverted. Security experts typically recommend you do this in person or by secure end-to-end video where you can see each other (FaceTime, or Zoom with its end-to-end option enabled). You should also be able to rely on a non-secured voice call, but you may want to have established some answers or code words to eliminate the possibility of an attacker using an AI voicebot to fool you—with a sufficient sample of the target’s speech, they’re pretty good.

The goal of these out-of-band methods is to confirm that the other person retains control of their Apple ID and devices; the verification process aims to confirm who has the account password and second factors.

If the numbers don’t match in the above process, but you’re sure you are each the person the other believes you to be, something bad has happened: possibly some sort of man-in-the-middle attack between the two of you or manipulation of Apple’s key server or transparency log. At that point, you would need to contact someone with authority to figure out what has happened: that might be an employer, a trusted person at a non-government organization like Citizen Lab, Apple, or the FBI, depending on who you and the other person are, and what kind of private information you planned to exchange.

In nearly every case, however, the validation phase of Contact Key Verification should just work—the chance that the security of Messages or Apple’s key directory service has been breached is extremely low. The mere existence of Contact Key Verification may deter advanced attacks that would now fail because they’d be noticed.

Also, because this system is predicated on previous acquaintanceship and an existing iMessage conversation, it can’t be subverted by another party pretending that they have the verification code you read. If they say, “Yes, my code matches,” when yours is “1234 5678” and theirs is “8765 4321,” and you tap Mark As Verified—it gets them nothing. If they’re in the same conversation, the code will be identical, no matter how they gained access to it; if they’re not in the same conversation with you, the code won’t match, and they can’t use your reading of the code to their benefit. In fact, the verification code can also identify when something has already gone wrong and provides a starting point for monitoring future changes.

Looking at it another way, this explains why Apple notes someone with a “public persona” could post a verification code online without risk. When someone wants to start an iMessage conversation with a public figure, they rely on that public element as proof that the public figure is who they say they are. Further, because Apple associates their public key with the email addresses and phone numbers associated with their Apple ID account, someone contacting them and verifying their code could only face an imposter if the hijacker had taken over the public figure’s Apple ID account.

Contact Key Verification is a solution to an issue known for years. Way back in 2016, security researcher Matthew Green explained several of iMessage’s fundamental design problems, with one of the worst being “iMessage’s dependence on a vulnerable centralized key server.” (Another was Apple’s failure to publish the iMessage protocol, which remains a concern. I wrote a Macworld column about these failings in 2016.)

Apple bluntly agrees in the blog post that its iMessage system is vulnerable to such attacks:

While a key directory service like Apple’s Identity Directory Service (IDS) addresses key discovery, it is a single point of failure in the security model. If a powerful adversary were to compromise a key directory service, the service could start returning compromised keys — public keys for which the adversary controls the private keys — which would allow the adversary to intercept or passively monitor encrypted messages.

Let me dig into the key directory service—Apple’s Identity Directory Service—a little more. In iMessage conversations, your devices generate unique, private encryption keys that Apple never has access to—these keys are never transmitted to Apple. Since iMessage launched, with its secure end-to-end encryption, the first device you logged into iCloud using your Apple ID account created a locally held pair of public-private keys. As you add new devices to your iCloud account, each new device performs a key exchange with the other devices in your iCloud set so that each one can encrypt and decrypt messages.

You may have been baffled by this key exchange because Apple explains it poorly. When one of your devices says you need to enter the passcode/password of another of your iCloud-connected devices and the passcode/password won’t be transmitted to Apple, you’re seeing the key exchange process. When you add a device to your iCloud set, you have to enter a secret—the passcode or password—known only to you about another device in your existing iCloud set. That other device has encrypted various key information using its passcode or password (among other information).

As a practical example, let’s say you have an iPhone and a Mac logged into iCloud when you buy a new iPad. When you log in to iCloud on your iPad, after the home screen appears, you should be prompted to enter the passcode for your iPhone or the login password for the account on your Mac associated with that Apple ID. When you do, that enables your new iPad to decrypt the information associated with your iCloud account, giving it access to Messages in iCloud data, iCloud Keychain information, and other encrypted details. Your iPad then encrypts its unique keys and shares them over iCloud with your other devices. This device-only key exchange process enables your devices to share unique key information about which Apple knows nothing.

For iMessage conversations, there’s another process. Apple’s Identity Directory Service stores the public part of a public-private key pair as part of an approach known as public-key cryptography used across all of Apple’s end-to-end encryption systems. Public-key cryptography, widely used across Internet and computer systems, allows people to exchange securely encrypted data without a previous relationship because the public key in the pair can be freely shared without disclosing any portion of the private key. The private key is used for decryption and cryptographically signing data to prove the initiator’s identity and that the data hasn’t been tampered with. Your device keeps and protects the private key, which never leaves the hardware; on Intel Macs with a T2 chip or any iPhone, iPad, or Mac with Apple silicon, it’s locked inside the one-way Secure Enclave. The public key confirms the identity of anything signed with the private key.
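For readers who want to see the sign-with-private, verify-with-public relationship in action, here is a toy Python sketch using textbook RSA. Everything about it is an assumption made for illustration: the primes are far too small to be secure, there is no padding, and the article does not say which signature algorithm Apple uses. The sample key bytes are placeholders.

```python
import hashlib

# Toy parameters: two well-known Mersenne primes. Much too small for real use.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                            # public modulus
E = 65537                            # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (requires Python 3.8+)


def digest_as_int(message: bytes) -> int:
    """Reduce a SHA-256 digest to a number smaller than the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Only the holder of the private exponent D can compute this."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Anyone with the public values (N, E) can check a signature."""
    return pow(signature, E, N) == digest_as_int(message)


# Placeholder for the data being vouched for, such as a public key.
imessage_public_key = b"hypothetical iMessage public key bytes"
signature = sign(imessage_public_key)

print(verify(imessage_public_key, signature))         # genuine data
print(verify(b"attacker-substituted key", signature)) # swapped data
```

The signature only checks out against the exact bytes that were signed, which is what lets a recipient detect both impersonation and tampering without ever seeing the private key.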

The weakness in public-key cryptography is that there’s no inherent part of the key-generation protocol that lets other parties validate who owns a given public key. That’s where infrastructure gets built. Back in the days of Pretty Good Privacy (PGP), keyservers abounded. Later, Keybase offered a more modern solution, but it still relied on a lot of trust. Now owned by Zoom, Keybase never achieved mass adoption. Apple’s Identity Directory Service leverages Apple’s control over every piece of the encryption software, device hardware, and server technology—and even then, Apple admits that security researchers are correct: it’s not enough for the highest degree of protection. This admission comes after Apple has seen many government-driven exploits of and via Messages through spyware—it even sued NSO Group over this (see “Apple Lawsuit Goes After Spyware Firm NSO Group,” 24 November 2021); the suit is slowly progressing through the courts.

To mitigate the weakness of a directory of unencrypted public keys provided by devices, Contact Key Verification adds an extra key, described earlier. This unique key, shared only among your iCloud device set, uses a one-way cryptographic process against your iMessage public key to produce a signature. That signature can’t be forged—one must have the original key, which exists only on your devices, to produce a signature that can be validated. (This is another reason why protecting physical access to your devices and their passcodes/passwords is so utterly important! Never share a passcode with anyone you wouldn’t trust with access to your bank account, and never enter a passcode in public; see “How a Thief with Your iPhone Passcode Can Ruin Your Digital Life,” 26 February 2023, and “How a Passcode Thief Can Lock You Out of Your iCloud Account, Possibly Permanently,” 20 April 2023.)

This signature is essentially cryptographically concentrated into the eight-digit code. Two people seeing the same eight digits provides the missing piece that closes the loop: their devices become the source of truth about the keys for those two people’s iMessage accounts, not Apple’s Identity Directory Service. If anything changes, each device knows enough to spot discrepancies in Apple’s Identity Directory Service or the transparency log.
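One way to picture how key material could be concentrated into eight digits is to hash both parties’ keys together and keep a short numeric slice of the result. The Python sketch below does exactly that. It is an assumption-laden illustration, not Apple’s derivation, which the article does not specify: the function name `verification_code`, the use of SHA-256, and the sample keys are all hypothetical.

```python
import hashlib


def verification_code(key_a: bytes, key_b: bytes) -> str:
    """Derive an eight-digit code that both parties compute identically."""
    # Sort so the result does not depend on who is "me" and who is "them".
    first, second = sorted((key_a, key_b))
    material = (
        len(first).to_bytes(4, "big") + first
        + len(second).to_bytes(4, "big") + second
    )
    digest = hashlib.sha256(material).digest()
    number = int.from_bytes(digest[:8], "big") % 10**8
    digits = f"{number:08d}"
    return f"{digits[:4]} {digits[4:]}"


alice_key = b"alice-verification-key"
bob_key = b"bob-verification-key"
attacker_key = b"attacker-verification-key"

# Alice and Bob each compute the code on their own device and read it aloud.
print(verification_code(alice_key, bob_key) == verification_code(bob_key, alice_key))

# If an attacker's key has been substituted for Bob's, Alice sees a different code.
print(verification_code(alice_key, attacker_key) == verification_code(alice_key, bob_key))
```

Because the code depends on both keys, a match tells each person that their device holds the same pair of keys as the other person’s device, and reading the code aloud gives away nothing an impostor could reuse.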

Contact Key Verification goes a long way towards giving iMessage the best-in-class robustness that security researchers have been telling Apple is possible for some time. It may seem minor for everyday users, but it’s a giant leap forward for those whose livelihoods—or lives—could be threatened by compromised communications.
